From Zero to Quantum

The quantum computing revolution is here.

What is it and how will Post-Quantum Cryptography impact digital trust?

What Is Quantum Computing?

Quantum computing is a quickly developing technology that combines quantum mechanics with advanced mathematics and computer engineering to solve problems that are too complex for classical computers. Because quantum computing operates on fundamentally different principles than classical computing, using fundamentally different machines, Moore’s Law doesn’t apply. The incredible and rapidly increasing power and capabilities of quantum computing are already changing how we use computers to solve problems, analyze information, and protect data.

Why Do We Want Quantum Computing?

Today, even the most advanced supercomputers in the world run calculations on binary transistor logic, using computing principles that date back to the earliest electronic computers of the mid-twentieth century. Many problems involve so many interacting variables that they can’t be calculated in a practical amount of time with this classical computing model.

Because quantum computing works with quantum states rather than fixed binary values, some of these complex problems could be calculated as quickly as a classical computer might solve a classical problem.

Quantum computing opens the door for solving variabilities with great nuance in non-traditional ways. Quantum computing scientists see opportunities for great benefits in systems that are highly complicated and involve seemingly random factors, like weather modeling, medicine and chemistry, global finance, commerce and supply transport, cybersecurity, true Artificial Intelligence—and, of course, quantum physics.

What Makes Quantum Computing So Powerful?

Even the fastest and most powerful supercomputers function on brute force calculations. They “try” every possible outcome, in a linear pathway, until one outcome proves the solution.

By contrast, quantum computers can “skip over” that linear journey through every pathway by using quantum mechanics to simultaneously consider all possible outcomes. Quantum computing works with probabilities rather than binaries. This form of computing allows for solutions to problems that are too large or too complex to solve in any reasonable time by a classical computer. Where a classical computer can sort through and catalog large amounts of data, it can’t predict behavior within that data.

The probability characteristics of quantum computing offer the ability to consider all potentialities of the entire data set and arrive at a solution when it comes to the behavior of an individual piece of data within the massive, complex group.

Just as classical computing excels in certain types of calculations but not in others, quantum computing is great at particular computing applications but not all computing. Most experts agree that classical supercomputers and quantum computers will complement each other, with each performing extremely powerful calculations in different applications using the unique computing characteristics of each.

How Does a Quantum Computer Work?

An understanding of quantum computing relies on an understanding of the principles dictating the behavior of quantum movement, position, and relationships.

Superposition

At the quantum level, physical systems can exist in multiple states at the same time. Until the system is observed or measured, the system occupies all positions at once. This central principle of quantum mechanics allows quantum computers to work with the potential of the system, where all possible outcomes exist in a computation simultaneously. In the case of quantum computing, the systems used can be photons, trapped ions, atoms, or quasi-particles.
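Superposition can be made concrete with a little linear algebra. The sketch below is an illustration only, assuming NumPy is available; the names `ket0`, `ket1`, `plus`, and `measurement_probabilities` are chosen for this example. It represents one qubit as a vector of two amplitudes and shows that measuring an equal superposition gives 0 or 1 with equal probability.

```python
import numpy as np

# Basis states |0> and |1> written as vectors of two complex amplitudes.
ket0 = np.array([1, 0], dtype=complex)
ket1 = np.array([0, 1], dtype=complex)


def measurement_probabilities(state):
    """Probability of reading 0 or 1: the squared magnitude of each amplitude."""
    return np.abs(state) ** 2


# An equal superposition of |0> and |1>.
plus = (ket0 + ket1) / np.sqrt(2)
probs = measurement_probabilities(plus)

# Each simulated measurement yields a definite 0 or 1, chosen with those probabilities.
rng = np.random.default_rng(seed=1)
samples = rng.choice([0, 1], size=1000, p=probs)

print("probabilities:", probs)
print("fraction of 1s in 1000 measurements:", samples.mean())
```

Before measurement the state carries both amplitudes at once; after measurement only a single 0 or 1 remains, which is why quantum algorithms have to be designed around what can actually be read out.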

Interference

Quantum states can interfere with other quantum states. Interference can take the form of canceling out amplitude or boosting amplitude. One way to visualize interference is to think of dropping two stones in a pool of water at the same time. As the waves from each stone cross paths, they will create stronger peaks and valleys in the ripples. These interference patterns allow quantum computers to run algorithms that are entirely different from those of classical computers.
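A small numerical sketch can show amplitudes canceling and reinforcing. It assumes NumPy and uses the Hadamard gate, a standard single-qubit operation that the text above does not name, purely as an illustration.

```python
import numpy as np

# Hadamard gate: turns |0> into an equal superposition of |0> and |1>.
H = np.array([[1, 1],
              [1, -1]]) / np.sqrt(2)

ket0 = np.array([1.0, 0.0])

after_one = H @ ket0       # equal amplitudes on |0> and |1>
after_two = H @ after_one  # amplitudes recombine

print("after one gate: ", after_one)
print("after two gates:", after_two)
```

After the second gate, the two contributions to the |1> amplitude have opposite signs and cancel (destructive interference), while the two contributions to |0> add up (constructive interference), so the qubit returns to |0> with certainty. Quantum algorithms arrange this kind of cancellation so that wrong answers fade and right answers are amplified.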

Entanglement

At the quantum level, systems like particles can become linked, so that their measured properties are correlated with one another, even at great distances. By measuring the state of one entangled system, a quantum computer can “know” the state of the other system. In practical terms, for example, a quantum computer can know the spin of electron B by measuring the spin of electron A, even if electron B is millions of miles away.
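The correlation described here can be simulated classically for a two-qubit example. The sketch below assumes NumPy and uses a Bell state, a standard textbook entangled state chosen for illustration; the variable names are hypothetical.

```python
import numpy as np

# Two-qubit Bell state (|00> + |11>) / sqrt(2).
# Amplitudes are ordered as |00>, |01>, |10>, |11>.
bell = np.array([1, 0, 0, 1]) / np.sqrt(2)
probs = np.abs(bell) ** 2

# Sample joint measurement outcomes, encoded as integers 0..3.
rng = np.random.default_rng(seed=7)
outcomes = rng.choice(4, size=1000, p=probs)

qubit_a = outcomes // 2  # first qubit's result
qubit_b = outcomes % 2   # second qubit's result

all_match = bool(np.all(qubit_a == qubit_b))
print("outcome probabilities:", probs)
print("qubit A always equals qubit B:", all_match)
```

Each qubit on its own looks like a fair coin flip, yet the two results always agree, so reading one qubit tells you what the other will show.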

Qubits

In classical computing, calculations are made with combinations of binary values known as bits. This is the basis of the limitations of classical computing: calculations are written in a language that can only have one of two states at any given time – 0 or 1. With quantum computing, calculations are written in the language of the quantum state, which can be 0 or 1, or any proportion of 0 and 1 in superposition. This type of computational information is known as a “quantum bit,” or qubit.

Qubits possess characteristics that allow information to increase exponentially within the system. Describing the joint state of n qubits takes 2^n amplitudes, so each added qubit doubles the size of the state the system can work with, far more than adding a single bit does. For this reason, it’s difficult to overstate the potential computing power of quantum. The computing power of combined qubits grows much more rapidly than in classical computing, and because capacity scales exponentially with the number of qubits rather than with the number of processing chips, the theoretical ceiling is, by some measurements, far beyond what classical hardware can reach.
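The exponential growth can be checked with simple arithmetic. The sketch below counts the amplitudes needed to describe n qubits and the memory a classical machine would need to store them, assuming 16 bytes per amplitude (a double-precision complex number); the function names are illustrative.

```python
def amplitudes_needed(num_qubits):
    """Number of complex amplitudes that describe a general n-qubit state."""
    return 2 ** num_qubits


def classical_memory_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory a classical simulator needs to hold the full state vector."""
    return amplitudes_needed(num_qubits) * bytes_per_amplitude


for n in (1, 10, 30, 50):
    gib = classical_memory_bytes(n) / 2 ** 30
    print(f"{n:>2} qubits: {amplitudes_needed(n):,} amplitudes, {gib:,.6f} GiB")
```

Every additional qubit doubles both numbers, which is why exact classical simulation becomes impractical after a few dozen qubits.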

Types of Quantum Computers

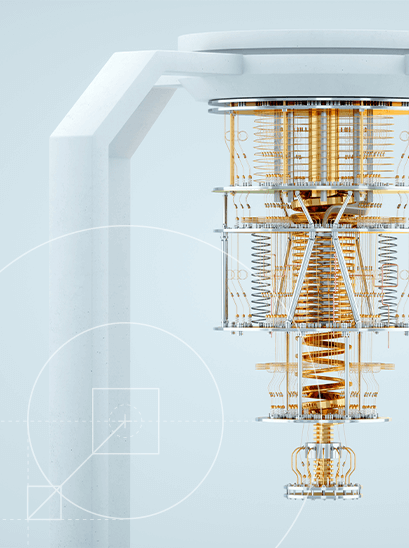

Regardless of type, quantum computing hardware is very different from the server farms associated with supercomputing. Quantum calculation requires placing particles into conditions where they can be measured without alteration or disruption by surrounding particles. In most cases, this means cooling the computer itself to near absolute zero and shielding qubits from noise using layers of gold. Because current quantum computers require such delicate and precise conditions, they must be built in highly specialized environments.

How Will Quantum Computers Be Used?

When we look at the application of quantum computing in the real world, it’s important to remember that this is an emerging field. At the moment, quantum computing is very much in its nascent stage, with existing quantum computers that are severely limited by the current state of the art. That said, quantum computing researchers and engineers agree that advancements are outpacing expectations. What we do with quantum computing will certainly change or evolve as the technology develops, but there are already promising areas of application.

When Will Quantum Computers Be Widely Used?

Despite the rapid advancement and great potential for impact, functional quantum computing is currently mostly theoretical. Quantum computers capable of doing the kinds of calculations and modeling at the scale of true quantum possibility are years away. Just how many years? Nobody is quite sure. Still, continuing progress in the field means it’s very possible we’ll see useful quantum computers sooner rather than later.

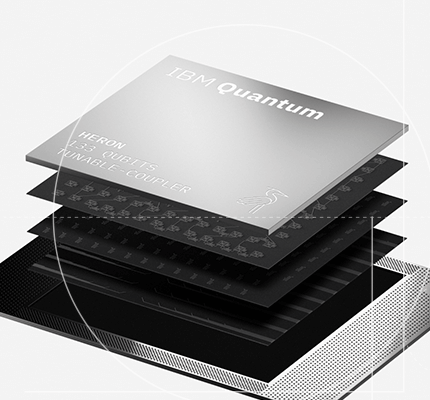

In 2023, IBM, one of the world leaders in quantum computing, announced they had achieved 133-qubit processing with their quantum Heron chip. IBM is working to couple three Heron processors together in 2024. One Heron chip is capable of running 1800 gates, with low error and high performance. IBM has published a roadmap for reaching their goal of error-corrected quantum computing by 2029.

The vast majority of quantum experts believe useful quantum computing will be achieved in the commercial space within a decade, if not sooner. Nation states may achieve quantum earlier.

The History of Quantum Computing and PQC

What Are the Security Implications of Quantum Computing?

Today, one of the major forms of digital encryption is RSA (Rivest-Shamir-Adleman), a widely used form of public key cryptography. The RSA algorithm was first described by Rivest, Shamir, and Adleman in 1977, and even decades later, it remains an exceptionally strong, proven system for encryption.

RSA is based on two large prime numbers that are multiplied together to form an even larger number, which becomes part of the public key. Where classical computers can easily multiply two known primes to calculate their product, they’re very poor tools in the reverse. Classical computers struggle to derive the two prime factors from the product. In short, current RSA algorithms are essentially unbreakable codes, because even the most powerful supercomputers can’t calculate the value of the private key in any reasonable amount of time. By some estimates, today’s 2048-bit RSA encryption would take the fastest supercomputer roughly 300 trillion years to break.
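The asymmetry between multiplying and factoring can be seen in a toy RSA example. The sketch below uses deliberately tiny primes and no padding, so it is insecure and only illustrative; the values 61, 53, and 17 and the helper `factor_by_trial_division` are choices made for this example. It needs Python 3.8 or later for the modular inverse form of `pow`.

```python
# Toy RSA with tiny primes. Real keys use primes hundreds of digits long.
p, q = 61, 53
n = p * q                  # public modulus: easy to compute from p and q
phi = (p - 1) * (q - 1)
e = 17                     # public exponent
d = pow(e, -1, phi)        # private exponent: requires knowing p and q

message = 65
ciphertext = pow(message, e, n)    # encrypt with the public key (e, n)
recovered = pow(ciphertext, d, n)  # decrypt with the private key d


def factor_by_trial_division(modulus):
    """Recover the two prime factors by trying every candidate in turn."""
    candidate = 2
    while candidate * candidate <= modulus:
        if modulus % candidate == 0:
            return candidate, modulus // candidate
        candidate += 1
    return None


print("ciphertext:", ciphertext, "recovered:", recovered)
print("factors found by brute force:", factor_by_trial_division(n))
```

Trial division succeeds instantly here because n is only four digits long. Classical factoring methods are far more sophisticated than trial division, but their cost still grows so quickly with key size that a 2048-bit modulus is out of reach.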

That’s where the threat of quantum computing comes into play. Using Shor’s algorithm, a sufficiently powerful quantum computer can use superposition and interference to expose the hidden structure of the factoring problem, effectively “skipping over” the one-route-at-a-time method of classical computers and arriving at an accurate calculation in a reasonable amount of time. Quantum computers are well equipped to factor large numbers into their prime factors, effectively breaking RSA. Predictions about near-future quantum computing suggest RSA encryption could be cracked in months, and more advanced quantum computers may be able to break RSA in hours or even minutes.
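Shor’s algorithm, the standard quantum factoring method, works by turning factoring into a period-finding problem. The sketch below is a classical illustration of that reduction only: `find_period` searches by brute force, which is exactly the step a quantum computer would accelerate. The function names and the small test numbers are assumptions made for this example.

```python
from math import gcd


def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, assuming gcd(a, n) == 1.

    Found here by brute force; this is the step Shor's algorithm speeds up.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factors_from_period(a, n):
    """Try to split n using the period of a modulo n; None if this a fails."""
    shared = gcd(a, n)
    if shared != 1:
        return tuple(sorted((shared, n // shared)))  # lucky guess
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root: pick another a
    return tuple(sorted((gcd(half - 1, n), gcd(half + 1, n))))


print(factors_from_period(7, 15))
print(factors_from_period(2, 21))
```

Once the period is known, the remaining steps are cheap greatest-common-divisor calculations that any classical computer can do.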

What Are the Current Risks?

Cybersecurity experts have focused not only on the threat posed to data in the future, once quantum reaches the point of common usefulness, but also on threats to current data.

Harvest now, decrypt later

In anticipation of quantum capability, governments and cybercriminals may practice “harvest now, decrypt later.” In this approach, a bad actor steals data and stores it in its encrypted state, expecting to decrypt it once they have access to a usable quantum computer. Even older data may contain parcels of information critical to operations for governments and companies, as well as private information on users, customers, health patients, and more.

What is Post-Quantum Cryptography (PQC)?

Although truly functional quantum computers may be years away, the potential for digital disruption in combination with “harvest now, decrypt later” poses a massive risk to data integrity. The world’s leading cybersecurity organizations and experts are already at work on developing security measures that will protect data against quantum decryption now and in the future.

Post-Quantum Cryptography, known as PQC, is a cryptographic system that protects data against decryption efforts by both classical and quantum computers.

The goal of PQC is to not only secure against quantum computers in the future, but to operate seamlessly with today’s protocols and network systems. Successfully implemented PQC countermeasures will integrate with current systems to protect data against all forms of current and future attack, regardless of the type of computer used.

While quantum computers are still in their infancy, cybersecurity experts have already created PQC algorithms that can protect against quantum attacks. These security tools will continue to evolve along with quantum computing, but current protections are equipped to stay ahead of quantum threats when properly implemented.

NIST Recommendations

The National Institute of Standards and Technology has already crafted recommendations for the use of PQC in anticipation of quantum computing threats. These include:

- Establish a Quantum-Readiness Roadmap

- Engage with technology vendors to discuss post-quantum roadmaps

- Conduct an inventory to identify and understand cryptographic systems and assets

- Create migration plans that prioritize the most sensitive and critical assets
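The inventory and prioritization steps above can be sketched in a few lines. This is a hypothetical illustration, not a NIST tool: the asset records, the 1 to 5 sensitivity scale, and the function names are all assumptions. The algorithm groupings reflect that RSA, DSA, ECDSA, ECDH, and Diffie-Hellman rely on math that quantum computers are expected to break, while ML-KEM, ML-DSA, and SLH-DSA are NIST post-quantum standards.

```python
# Public key algorithms whose underlying math a quantum computer is expected to break.
QUANTUM_VULNERABLE = {"RSA", "DSA", "ECDSA", "ECDH", "DH"}
# NIST post-quantum standards (FIPS 203, 204, 205).
POST_QUANTUM = {"ML-KEM", "ML-DSA", "SLH-DSA"}


def classify(algorithm):
    """Label an algorithm as 'post-quantum', 'migrate', or 'review'."""
    name = algorithm.upper()
    if name in POST_QUANTUM:
        return "post-quantum"
    if name in QUANTUM_VULNERABLE:
        return "migrate"
    return "review"  # symmetric ciphers, hashes, or unknown: assess separately


def migration_plan(inventory):
    """Quantum-vulnerable assets, most sensitive first."""
    to_migrate = [item for item in inventory if classify(item["algorithm"]) == "migrate"]
    return sorted(to_migrate, key=lambda item: item["sensitivity"], reverse=True)


# Hypothetical inventory; sensitivity is on an assumed 1 (low) to 5 (high) scale.
inventory = [
    {"asset": "public website TLS", "algorithm": "ECDH", "sensitivity": 3},
    {"asset": "code signing", "algorithm": "RSA", "sensitivity": 5},
    {"asset": "backup encryption", "algorithm": "AES-256", "sensitivity": 4},
    {"asset": "pilot VPN", "algorithm": "ML-KEM", "sensitivity": 2},
]

for item in migration_plan(inventory):
    print(item["asset"], "->", item["algorithm"])
```

A real inventory would be gathered from certificates, libraries, protocols, and vendor documentation rather than typed by hand, but the ordering logic stays the same: find what is vulnerable, then move the most sensitive assets first.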

Quantum Computing vs. Quantum Cryptography vs. Post-Quantum Cryptography

Overlapping terms and algorithms can lead to a misunderstanding of the technology and the associated threats.

Quantum computing

Possibly the most misused term in quantum computing is “Post-Quantum Computing,” abbreviated as “PQC.” This term has led to confusion, because it shares its abbreviation with “Post-Quantum Cryptography.” However, “Post-Quantum Computing” doesn’t exist in the world of quantum computer science. A quantum computer is the full term for the machine, and quantum computing describes the field and the process. Even long after advanced, highly useful machines exist, they will still be quantum computers, not post-quantum computers.

Quantum cryptography

Quantum cryptography is based directly on quantum mechanics, which makes it a different cryptographic technology from PQC. In quantum cryptography, the fundamental unpredictability of quantum measurement is used to protect data, with information directly encoded in qubits themselves. Currently, the most commonly known version, quantum key distribution (QKD), uses the properties of qubits to share keys in a way that would produce qubit errors if someone tries to intercept the information without permission. This form of quantum encryption works more like an alarm sensor on a door or window. Unauthorized access raises an alarm.
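The “alarm” behavior can be simulated classically. The sketch below models BB84, a well-known quantum key exchange protocol that the text above does not name and that is used here only as an assumed illustration. Measuring a qubit in the wrong basis gives a random result, so an eavesdropper who intercepts and resends qubits leaves errors behind. The function names and the "+" and "x" basis labels are choices made for this example.

```python
import random


def measure(bit, prepared_basis, measured_basis, rng):
    """Matching bases return the bit; mismatched bases give a random result."""
    return bit if prepared_basis == measured_basis else rng.randint(0, 1)


def sifted_error_rate(num_qubits, eavesdrop, seed=0):
    """Error rate on the positions where sender and receiver used the same basis."""
    rng = random.Random(seed)
    kept = errors = 0
    for _ in range(num_qubits):
        bit, alice_basis = rng.randint(0, 1), rng.choice("+x")
        sent_bit, sent_basis = bit, alice_basis
        if eavesdrop:
            # The eavesdropper measures in a random basis and resends what she saw.
            eve_basis = rng.choice("+x")
            sent_bit = measure(bit, alice_basis, eve_basis, rng)
            sent_basis = eve_basis
        bob_basis = rng.choice("+x")
        bob_bit = measure(sent_bit, sent_basis, bob_basis, rng)
        if bob_basis == alice_basis:  # only these positions are kept
            kept += 1
            errors += bob_bit != bit
    return errors / kept


print("error rate without eavesdropper:", sifted_error_rate(4000, eavesdrop=False))
print("error rate with eavesdropper:   ", sifted_error_rate(4000, eavesdrop=True))
```

Without interception the kept bits agree perfectly. With interception, roughly a quarter of them disagree, which is the signal that tells both parties to discard the key.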

Post-Quantum Cryptography (PQC)

Post-Quantum Cryptography operates on mathematical equations, just like classical computing encryption. The difference is in the type of math problem. PQC relies on problems, such as those built on lattices and hash functions, that are believed to be so difficult to solve that even quantum computers can’t “skip” to the correct solution. Because PQC shares the same basic structure as current classical encryption and runs on classical hardware, it can be deployed using methods similar to current state-of-the-art encryption, and it can protect much of today’s systems.

Current PQC standards

Cryptographers have developed several sets of algorithms that address quantum threats. The sets vary according to performance requirements. Some systems can handle more intensive PQC operations, while others need a solution that doesn’t heavily strain resources. And, as with other forms of classical encryption, different sets of PQC apply to different use cases. The sets below are currently considered strong PQC.

CRYSTALS-Kyber

Kyber is the basis for the standard NIST calls Module-Lattice-Based Key-Encapsulation Mechanism (ML-KEM), published as FIPS 203. It is an asymmetric cryptosystem whose security rests on the module learning with errors problem (M-LWE). Kyber has been applied to key exchange and public key encryption, including quantum-resistant key exchange in TLS/SSL for secure websites.
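The learning with errors idea can be shown with a toy single-bit encryption scheme. The sketch below is plain LWE in the style of Regev’s scheme, not Kyber or ML-KEM: it has no module structure, uses tiny parameters, and is insecure. The constants `Q`, `N`, `M` and all function names are assumptions made for illustration.

```python
import random

Q = 3329  # modulus (toy setting)
N = 8     # secret length
M = 16    # number of noisy equations in the public key


def keygen(rng):
    """Public key: random matrix A and b = A*s + small error. Private key: s."""
    s = [rng.randrange(Q) for _ in range(N)]
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    e = [rng.choice([-1, 0, 1]) for _ in range(M)]
    b = [(sum(a * si for a, si in zip(row, s)) + ei) % Q for row, ei in zip(A, e)]
    return (A, b), s


def encrypt(public_key, bit, rng):
    """Add up a random subset of the equations and hide the bit at Q/2."""
    A, b = public_key
    rows = [i for i in range(M) if rng.random() < 0.5]
    u = [sum(A[i][j] for i in rows) % Q for j in range(N)]
    v = (sum(b[i] for i in rows) + bit * (Q // 2)) % Q
    return u, v


def decrypt(s, ciphertext):
    """Subtract u*s; what remains is small noise (bit 0) or about Q/2 (bit 1)."""
    u, v = ciphertext
    noisy = (v - sum(uj * sj for uj, sj in zip(u, s))) % Q
    return 1 if Q // 4 < noisy < 3 * Q // 4 else 0


rng = random.Random(42)
public_key, secret = keygen(rng)
for bit in (0, 1):
    print(bit, "->", decrypt(secret, encrypt(public_key, bit, rng)))
```

Without the secret, an attacker sees only noisy linear equations, and recovering `s` from them is the learning with errors problem. The small errors are what make the problem hard, and keeping them small is what lets the key holder still decrypt correctly.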

CRYSTALS-Dilithium

Dilithium is also a lattice-based scheme, built with the Fiat-Shamir with Aborts technique. Its security rests on the hardness of module lattice problems, including the short integer solution (SIS) problem. Dilithium’s design gives it a small combined public key and signature size for a lattice-based scheme. NIST has recommended Dilithium as a PQC solution for digital signatures and has standardized it as ML-DSA in FIPS 204.

SPHINCS+

SPHINCS+ is a hash-based digital signature scheme that uses FORS and WOTS+ to secure against quantum attacks. This basis gives SPHINCS+ the advantage of short public and private keys, although its signatures are longer than those of Dilithium and Falcon. SPHINCS+ is covered in FIPS 205.
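The idea behind hash-based signatures can be shown with a Lamport one-time signature, a much simpler relative of the building blocks in SPHINCS+. This is a swapped-in teaching example, not the SPHINCS+ construction, and each key pair must sign only one message. The function names are illustrative, and only Python’s standard `hashlib` and `secrets` modules are used.

```python
import hashlib
import secrets


def sha256(data):
    return hashlib.sha256(data).digest()


def keygen():
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(sha256(zero), sha256(one)) for zero, one in private]
    return private, public


def message_bits(message):
    """The 256 bits of the message's SHA-256 digest."""
    digest = sha256(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(private, message):
    """Reveal one secret from each pair, selected by the digest bits."""
    return [private[i][bit] for i, bit in enumerate(message_bits(message))]


def verify(public, message, signature):
    """Hash each revealed secret and compare it with the public key."""
    bits = message_bits(message)
    return all(sha256(sig) == public[i][bit]
               for i, (sig, bit) in enumerate(zip(signature, bits)))


private_key, public_key = keygen()
signature = sign(private_key, b"quantum-safe hello")
print(verify(public_key, b"quantum-safe hello", signature))
print(verify(public_key, b"tampered message", signature))
```

Security depends only on the hash function being hard to invert, which is why hash-based schemes are considered a conservative choice against quantum attacks. The signature here is 8 KB (256 values of 32 bytes each), which illustrates the long-signature tradeoff of this approach.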

FALCON

Falcon is a lattice-based digital signature scheme based on a hash-and-sign method. The name is an acronym for Fast Fourier Lattice-based Compact Signatures over NTRU. The advantage of Falcon is a small public key and a small signature.
